Beyond Prompts: The Future of AI Copilots

Looking beyond prompts and outputs to truly conversational experiences.

Conversational AI agents are not new. We have seen AI assistants like Siri, Alexa, Cortana, and Google Assistant for many years now. However, these assistants have always been limited in how interactive they can be: they are mostly built to carry out one specific, already well-defined task.

But with the rise of LLMs, we might finally get something meaningful out of AI assistants: tools that can do more than a fixed set of predefined tasks. Let’s think about what the near future of human-AI interaction might look like, specifically with assistants and copilots.

With generative AI, we can already see the ability to create meaningful things that are not predefined or narrowly limited. It is exciting to think about Microsoft’s idea of a “copilot”, where these tools augment our abilities to do things better, whether professionally or personally. I also see a growing number of incredible AI tools built on the OpenAI GPT models, the plugins within ChatGPT, and improved search engines. Yet all of these are still just tools and don’t truly feel like a copilot for my everyday life. What’s next?

Multimodality

You can go to ChatGPT to generate text, to Midjourney to generate images, or to GitHub Copilot to generate code, but you still have to do a lot of copying and pasting to make real use of all this generated content. A big reason for this limitation is the lack of interoperability between copilots. Multimodal assistants, which process, understand, and generate more than one type of data, are probably the immediate next step. For instance, Bing can now be prompted with images. We will likely soon see this in many other search engines and AI generators.

Collaborative Execution

Most, if not all, of these new generative AI experiences are driven by prompts. If you have used ChatGPT or other generative AI tools, you know that the output depends heavily on how well you write the prompt. And while there are many issues with this kind of interaction, I want to focus on one: you have no control once you have submitted the prompt.

We often hear that a good conversation depends on good listening. But these generative copilots seem to be missing exactly that. Once you prompt the copilot to do something, it generates whatever it best understands (or misunderstands) from that one prompt. If the outcome is not what you wanted, you reframe the prompt and run it again. So it’s not really a conversation but rather a button you press to get an outcome, only with better-formed sentences that sound personalized.

It only becomes a real conversation at the next level of personalization. Instead of prompting, you speak. You ask your copilot to do something, and before executing it, the copilot asks you questions back so it can deliver the right outcome in just the right way. Instead of trial and error to find the right prompt and the right content, it is an ongoing conversation with your copilot to fine-tune all the details. Executing collaboratively like this would be a more efficient way of working with AI assistants.

AI-Led Experiences

The other challenge in interacting with today’s AI tools is that they are mostly domain-specific. The reason is that we are embedding these copilots into our existing experiences rather than creating new experiences centered around AI.

Many AI assistants, even LLM-based ones, are being placed inside existing applications, like Copilot in Teams and Outlook, or summarization within Zoom and other video conferencing tools. But there is much room for innovation in AI that serves outcomes, not just outputs.

With AI-led experiences, copilots would not only help with text, images, or other media outputs, but create end-to-end artifacts. For example, if you wanted to open a new bakery, you wouldn’t just use a copilot to help plan recipes, create a logo, and write the body copy for your website; the copilot itself would create the recipes, build a fully functional website, and run a marketing campaign.

These AI-led experiences won’t be embedded in our everyday tools; instead, the tools will be embedded in the AI to create complete ecosystems. Thinking of ecosystems, imagine Siri drawing on the entire Apple ecosystem to support your everyday work and more. Static applications and documents would soon seem boring, and everything would be smart and fluid.

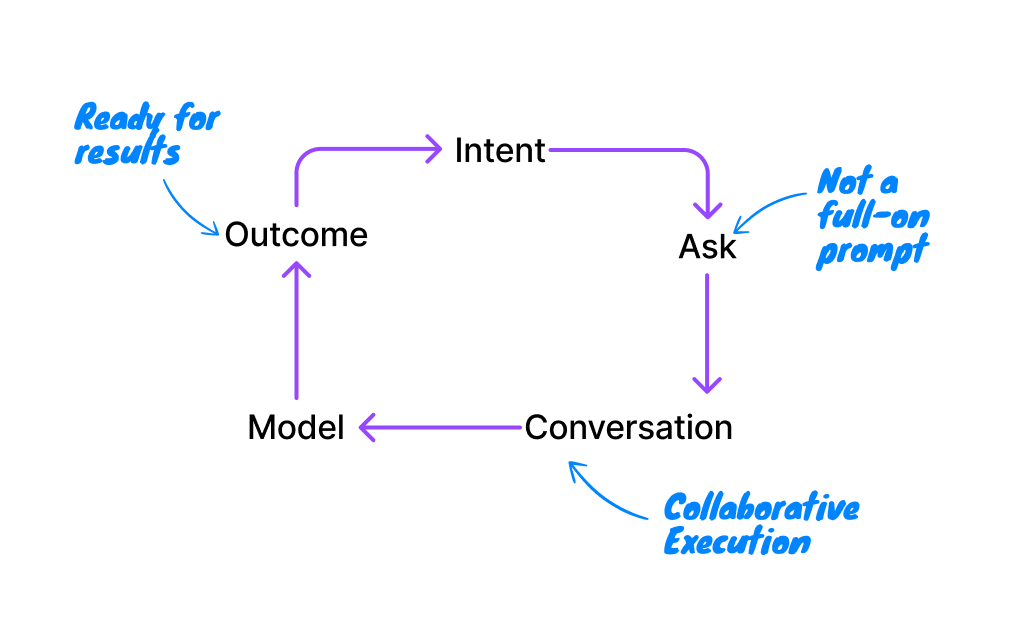

We can think of human-AI interaction as the following steps:

Human has a job to be done (intent).

Human communicates the intent as text, voice, image, and/or video (ask).

AI does collaborative execution (conversation).

AI understands the true intent and creates a personalized plan (model).

Human and AI together create the result (outcome).
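To make the “ask back before executing” idea concrete, here is a minimal Python sketch of these five steps, assuming a hypothetical copilot whose required details and plan templates are hard-coded (a real copilot would infer both with an LLM). `Ask`, `converse`, `make_plan`, `collaborate`, `REQUIRED_DETAILS`, and `PLAN_TEMPLATES` are illustrative names, not any product’s API. The bakery example from earlier serves as the intent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical tables: which details the copilot must clarify for an intent,
# and a plan template for that intent. A real copilot would infer both with an LLM.
REQUIRED_DETAILS: Dict[str, List[str]] = {
    "open a bakery": ["city", "budget", "style"],
}
PLAN_TEMPLATES: Dict[str, List[str]] = {
    "open a bakery": [
        "Create recipes in a {style} style",
        "Build a website for a bakery in {city}",
        "Run a marketing campaign with a {budget} budget",
    ],
}


@dataclass
class Ask:
    intent: str  # the job to be done
    details: Dict[str, str] = field(default_factory=dict)  # what the human already said


def converse(ask: Ask, answer: Callable[[str], str]) -> Ask:
    """Collaborative execution: ask back for every missing detail before acting."""
    for detail in REQUIRED_DETAILS.get(ask.intent, []):
        if detail not in ask.details:
            ask.details[detail] = answer(f"Which {detail} should I plan for?")
    return ask


def make_plan(ask: Ask) -> List[str]:
    """Model: fill the intent's plan template with the clarified details."""
    return [step.format(**ask.details) for step in PLAN_TEMPLATES.get(ask.intent, [])]


def collaborate(ask: Ask, answer: Callable[[str], str],
                approve: Callable[[str], bool]) -> List[str]:
    """Outcome: the human stays in the loop and keeps only the steps they approve."""
    plan = make_plan(converse(ask, answer))
    return [step for step in plan if approve(step)]


if __name__ == "__main__":
    # Scripted answers stand in for the human side of the conversation.
    scripted = {"Which city should I plan for?": "Lisbon",
                "Which budget should I plan for?": "$5,000"}
    ask = Ask("open a bakery", details={"style": "rustic"})
    result = collaborate(ask, answer=scripted.__getitem__,
                         approve=lambda step: "marketing" not in step)
    print(result)
```

The copilot only asks about details the human has not already given (here, the city and the budget), and the human still decides which parts of the plan become the outcome.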

Copilot to Autonomous AI

It is not difficult to imagine a generation beyond the ones I describe above, where everything is autonomous and things happen at the speed of thought. But we are still far from truly autonomous AI.

We can talk all we want about AI augmenting our work, but we will still struggle to collaborate with these tools efficiently. Just because they learn to do more things better than us does not mean they will do exactly what we want. The way we work is already changing, but as we move towards new computing platforms with AI-led experiences, humans are still in the driver’s seat, and AI will continue to need the right guidance for quite some time.

For now, we should focus on working collaboratively with multimodal, AI-led experiences that enable us to be more efficient and achieve better outcomes.

Hope you liked what you read! If you enjoyed this letter, please share it with your friends interested in AI ✌️